An important concept for many machine learning algorithms, particularly supervised algorithms, is error calculation: a measure of how far the current solution is from the ideal one. Error calculations are used to determine when a given solution is close enough, since achieving 100% accuracy is unlikely. This page looks at three examples of error calculation: Sum of Squares Error (SSE), Mean Square Error (MSE), and Root Mean Square Error (RMS).

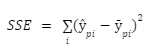

Sum of Squares:

The Sum of Squares is given by the following equation:

Where ŷ is the ideal output and ȳ is the actual output.
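As a minimal sketch of the calculation, assuming the common form of the Sum of Squares (each ideal output minus the matching actual output, squared, then summed, with no extra 1/2 factor), the following Python function computes it. The function name and the sample values are made up for illustration.

```python
def sum_of_squares_error(ideal, actual):
    """Sum of squared differences between ideal and actual outputs."""
    if len(ideal) != len(actual):
        raise ValueError("ideal and actual must have the same length")
    return sum((i - a) ** 2 for i, a in zip(ideal, actual))


# Hypothetical sample data: four ideal outputs and four actual outputs.
ideal = [1.0, 0.0, 1.0, 1.0]
actual = [0.5, 0.0, 1.0, 0.0]

sse = sum_of_squares_error(ideal, actual)
print(sse)  # 0.25 + 0 + 0 + 1 = 1.25
```

Note that the result grows with the number of samples, so SSE values are only comparable between runs that use the same data set size.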

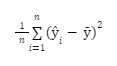

Mean Square Error:

The Mean Square Error is the Sum of Squares divided by the number of samples, where ŷ is the ideal output and ȳ is the actual output.
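A small Python sketch of this calculation is shown here, assuming the standard definition (the average of the squared differences). The function name and the sample values are illustrative only.

```python
def mean_square_error(ideal, actual):
    """Average of squared differences between ideal and actual outputs."""
    if len(ideal) != len(actual):
        raise ValueError("ideal and actual must have the same length")
    if not ideal:
        raise ValueError("at least one sample is required")
    squared = [(i - a) ** 2 for i, a in zip(ideal, actual)]
    return sum(squared) / len(squared)


# Hypothetical sample data: four ideal outputs and four actual outputs.
ideal = [1.0, 0.0, 1.0, 1.0]
actual = [0.5, 0.0, 1.0, 0.0]

mse = mean_square_error(ideal, actual)
print(mse)  # 1.25 / 4 = 0.3125
```

Because the sum is divided by the sample count, the value stays comparable when the number of samples changes.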

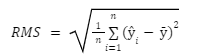

Root Mean Square:

The Root Mean Square is the square root of the Mean Square Error, where ŷ is the ideal output and ȳ is the actual output.
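The sketch below shows one way to compute this in Python, assuming the standard definition (square root of the mean of the squared differences). The function name and sample values are hypothetical.

```python
import math


def root_mean_square_error(ideal, actual):
    """Square root of the mean squared difference between the outputs."""
    if len(ideal) != len(actual):
        raise ValueError("ideal and actual must have the same length")
    if not ideal:
        raise ValueError("at least one sample is required")
    squared = [(i - a) ** 2 for i, a in zip(ideal, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical sample data: four ideal outputs and four actual outputs.
ideal = [1.0, 0.0, 1.0, 1.0]
actual = [0.5, 0.0, 1.0, 0.0]

rms = root_mean_square_error(ideal, actual)
print(round(rms, 3))  # square root of 0.3125, approximately 0.559
```

Taking the square root puts the error back in the same units as the outputs themselves, which can make the value easier to interpret.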

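For the most part, the error methods presented can be used interchangeably: MSE is the SSE divided by the number of samples, and RMS is the square root of MSE, so on a fixed data set all three rank candidate solutions in the same order. They do differ in scale, so it is often recommended that each is tried during algorithm development to compare the outputs and the impact on the training of the algorithm.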
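To make the comparison concrete, here is a rough Python sketch that scores two made-up candidate outputs against the same ideal outputs using all three measures. The helper name `error_metrics` and the data are assumptions for illustration, not part of any particular library.

```python
import math


def error_metrics(ideal, actual):
    """Return SSE, MSE, and RMS for one set of actual outputs."""
    squared = [(i - a) ** 2 for i, a in zip(ideal, actual)]
    sse = sum(squared)
    mse = sse / len(squared)
    rms = math.sqrt(mse)
    return {"sse": sse, "mse": mse, "rms": rms}


# Hypothetical data: one set of ideal outputs, two candidate solutions.
ideal = [1.0, 0.0, 1.0, 1.0]
candidate_a = [0.5, 0.0, 1.0, 0.0]
candidate_b = [1.0, 0.5, 0.5, 1.0]

metrics_a = error_metrics(ideal, candidate_a)
metrics_b = error_metrics(ideal, candidate_b)

for name in ("sse", "mse", "rms"):
    better = "B" if metrics_b[name] < metrics_a[name] else "A"
    print(f"{name}: A={metrics_a[name]:.4f} B={metrics_b[name]:.4f} lower={better}")
```

With this data, candidate B has the lower error under every measure. The measures still differ in magnitude, which can matter when the error value feeds a stopping threshold or a learning rate.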